8 February 2025

Role mining is a technique for finding a minimal set of roles that map users to access permissions.

A typical application of role mining starts with mapping IT access permissions for many users to many folders. The objective is then to create the fewest roles possible that cover the existing access permissions for all users. Variations may allow permissions to be added or removed.

Role mining is a hard problem to solve. The academic literature describes several mathematical programming formulations, which perform OK for very small data sets but don't perform well for larger data sets. Consequently, most of the literature is focussed on using heuristics to find approximate solutions for larger role mining problems.

In this article, we implement a recently published role mining model. The paper's authors claim that their new model formulation is both tractable and practical, allowing real world data sets to be solved in a reasonable amount of time. Like the authors of that paper, we build both constraint programming (CP) and mixed integer linear programming (MILP) models, then compare their relative performance while solving several examples.

Download the models

The models described in this article are built in Python, using the Pyomo and OR-Tools libraries.

The files are available on GitHub.

Situation

We have users who have permission to access some folders on shared network drives. Currently, the folders that a user is permitted to access are defined individually for each user. We want to move our access control to a scheme based on roles, where each role grants access to specific folders and a user is assigned one or more roles.

The process of identifying a set of roles and assigning roles to users is called "role mining". A recent paper on the topic notes that:

Role mining is known to be hard in general and exact solutions are often impossible to obtain, so there exists an extensive literature on variants of the role mining problem that seek to find approximate solutions and algorithms that use heuristics to find reasonable solutions efficiently.

... In this paper, we first introduce the Generalized Noise Role Mining problem (GNRM) – a generalization of the MinNoise Role Mining problem – which we believe has considerable practical relevance.

Crampton et al. (2024). Bi-objective optimization in role mining

We implement CP-SAT and Pyomo models of Crampton et al.'s GNRM formulation, then apply the models to several examples to see how they perform.

Small example

The purpose of a role mining model is to take a matrix of user-permissions and derive two equivalent matrices: role-permission and user-role. Typically, the objective is to find the minimum number of roles that exactly cover the existing user-permissions, though many variations exist in the literature.

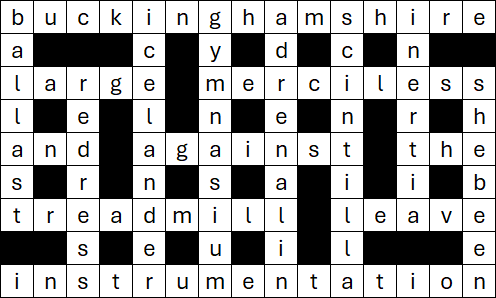

To illustrate role mining, Figure 1 shows a matrix of 30 Users and 20 Permissions. In this example, all users have Permission 1. User 1 has Permissions 1 to 12 and 19 to 20. We can readily see some patterns, such as some permissions that are grouped together into blocks for some users – for example, 12 users have Permissions 13 to 15. However, discerning a minimal set of roles that cover all existing user-permissions is not an easy task, especially for a large matrix.

Using either of the models discussed below, Figure 2 shows a minimal set of 7 roles. That is, instead of every user having unique permissions, we need only 7 roles to cover all 20 permissions assigned to the 30 users.

Since every user has Permission 1, it might make sense to have a Role that consists of just Permission 1. But that would require 8 roles, while our goal in this example is to find the minimum number of roles that exactly cover all existing user-permissions. Consequently, the model includes Permission 1 in all the roles.

Figure 3 shows an assignment of the 30 users to the 7 roles such that we achieve the equivalent of the existing user-permissions shown in Figure 1. For example, User 1 has Roles 2, 3, 4, 6, and 7. In aggregate, these roles give User 1 the Permissions 1 to 12 and 19 to 20 – as they currently have. Note that a user may be assigned a permission by more than one role.

Model building would be easier if we could start with published code and data

In our article Academics, please publish your data and code, we implore academic researchers to publish data and code to accompany their papers. Crampton et al. have not published their code, as far as we know. Therefore, we need to build our models using only the mathematical formulation in their paper. Fortunately, the paper specifies the formulation in a way that is reasonably easy to understand. Often that is not true.

The paper also does not include any data, even though the author's present results for three test cases: Domino, Firewall 2, and Healthcare. Looking at the literature, these three cases are mentioned in several other academic papers – though none seem to make the data available online. However, tracing the source of those data sets, we found the original data via the Internet Archive on a defunct webpage for Robert Schreiber, who appears to have originally collated the data sets for the paper Fast exact and heuristic methods for role minimization problems published in 2008. We make those data sets available – in their original form and translated to work with our models – in our GitHub repository.

Our model is built to use a data format that is commonly used in this domain, as specified at the library of benchmarks for the Role Mining Problem (RMP). For example, Figure 4 shows a small set of data where each row specifies a user identifier (e.g. "u0") and the permissions that user currently has (e.g. "p0" and "p1"). The model uses this data to build the user-permissions binary matrix.

u0 p0 p1 u1 p0 p1 p9 p10 u2 p0 p2 p7 p8 p9 p10 u3 p0 p2 p11 p12 p13 u4 p0 p3 p4 p5 p6 u5 p0 p2 u6 p0 p1 p2 u7 p0 p1 p2 p7 p8 p9 p10

GNRM formulation

The Generalized Noise Role Mining problem (GNRM) formulation from Crampton et al. is specified in Figure 5.

In this formulation, we specify the number of roles NUM_R to create, then find the best fit of role-permissions and user-roles. When making the fit, we specify whether the model may add permissions to a user \(u\), remove a permission \(p\), or both. That is, we have three types of permission changes defined via variations of \(\text{f}_{u,p}\) such that:

- Security, \(\text{f}_{u,p} = \text{access}_{u,p}\). The new roles may remove a permission from a user but can never add a new permission, where \(\text{access}_{u,p}\) is the current user-permission matrix.

- Availability, \(\text{f}_{u,p} = 1 - \text{access}_{u,p}\). The new roles may add a permission to a user but can never remove an existing permission.

- Noise, \(\text{f}_{u,p} = 1\). The new roles may both remove and add permissions from users.

The objective is to minimize the number of discrepancies between the current user-permissions and the user permissions implied by the combination of role-permissions and user-roles. Crampton et al. claim that their model can find good or optimal solutions for practical data sets provided the number of roles plus discrepancies is relatively small.

The constraints are defined in blocks that depend on the value of \(\text{f}_{u,p}\) and the current user-permissions matrix \(\text{access}_{u,p}\). By running the model with different values for the number of roles, we can find the minimum number of roles to exactly cover the current user-permissions, or we can find a trade-off with an acceptable number of discrepancies.

Note that we have translated the symbols and subscripts from Crampton et al. to use names that we find easier to understand. For consistency, we use the same equation numbering for the objective function and constraints, starting at (2).

Implementation

Mixed Integer Linear Programming vs Constraint Programming

Crampton et al. experiment with using both Constraint Programming (CP) and Mixed Integer Linear Programming (MILP) to solve their formulation. They conclude that MILP, using the Gurobi solver, is substantially faster than CP. We also build MILP and CP versions, to see how they perform with the example data sets.

Model 1: Mixed Integer Linear Programming using Pyomo

We start by building a Pyomo model of the formulation, as shown in Figure 6. This model is a direct translation of the formulation, except that we've added two features:

- Weighted objective function, allowing us to explore alternative solutions via different weights on the number of discrepancies, definitions and assignments. We focus on minimizing just the number of discrepancies, but other objectives might be interesting.

- A constraint that requires every user to have at least one role. Otherwise, if the model may remove permissions, then the model may assign a user no permissions. We generally disable this constraint, but it might be important in some situations.

weights = [1, 0, 0]

model.obj = Objective(

expr=weights[0] * sum(model.discrepancy[u, p] for u in model.U for p in model.P) + \

weights[1] * sum(model.definition[r, p] for r in model.R for p in model.P) + \

weights[2] * sum(model.assignment[u, r] for u in model.U for r in model.R), sense=minimize)

model.constraints = ConstraintList()

def add_constraints(model, u, p, r):

if ENFORCE_ASSIGN:

model.constraints.add(sum(model.assignment[u, r] for r in model.R) >= 1) # Every user must have at least one role

if math.isclose(f_matrix[u, p], 0) and math.isclose(access[u, p], 0):

model.constraints.add(model.assignment[u, r] + model.definition[r, p] <= 1) # Constraint (3)

elif math.isclose(f_matrix[u, p], 0) and math.isclose(access[u, p], 1):

model.constraints.add(model.auxiliary[u, p, r] <= model.assignment[u, r]) # Constraint (4)

model.constraints.add(model.auxiliary[u, p, r] <= model.definition[r, p]) # Constraint (5)

model.constraints.add(sum(model.auxiliary[u, p, r] for r in model.R) >= 1) # Constraint (6)

elif math.isclose(f_matrix[u, p], 1) and math.isclose(access[u, p], 0):

model.constraints.add(model.assignment[u, r] + model.definition[r, p] <= 1 + model.discrepancy[u, p]) # Constraint (7)

else:

model.constraints.add(model.assignment[u, r] >= model.auxiliary[u, p, r]) # Constraint (8)

model.constraints.add(model.definition[r, p] >= model.auxiliary[u, p, r]) # Constraint (9)

model.constraints.add(sum(model.auxiliary[u, p, r] for r in model.R) >= 1 - model.discrepancy[u, p]) # Constraint (10)

for u in model.U:

for p in model.P:

for r in model.R:

add_constraints(model, u, p, r)Model 2: Constraint Programming using CP-SAT

A CP-SAT implementation of the model is shown in Figure 7. This is a direct translation of the Pyomo model. The rest of the code, including printing results and displaying plots of the matrices is mostly the same (differing only where necessary to accommodate the CP-SAT or Pyomo features).

weights = [1, 0, 0]

model.Minimize(weights[0] * sum(discrepancy[u, p] for u in range(num_u) for p in range(num_p)) \

+ weights[1] * sum(definition[r, p] for r in range(NUM_R) for p in range(num_p)) \

+ weights[2] * sum(assignment[u, r] for u in range(num_u) for r in range(NUM_R)))

for u in range(num_u):

for p in range(num_p):

for r in range(NUM_R):

if ENFORCE_ASSIGN:

model.Add(sum(assignment[u, r] for r in range(NUM_R)) >= 1) # Every user must have at least one role

if math.isclose(f_matrix[u, p], 0) and math.isclose(access[u, p], 0):

model.Add(assignment[u, r] + definition[r, p] <= 1) # Constraint (3)

elif math.isclose(f_matrix[u, p], 0) and math.isclose(access[u, p], 1):

model.Add(auxiliary[u, p, r] <= assignment[u, r]) # Constraint (4)

model.Add(auxiliary[u, p, r] <= definition[r, p]) # Constraint (5)

model.Add(sum(auxiliary[u, p, r] for r in range(NUM_R)) >= 1) # Constraint (6)

elif math.isclose(f_matrix[u, p], 1) and math.isclose(access[u, p], 0):

model.Add(assignment[u, r] + definition[r, p] <= 1 + discrepancy[u, p]) # Constraint (7)

else:

model.Add(assignment[u, r] >= auxiliary[u, p, r]) # Constraint (8)

model.Add(definition[r, p] >= auxiliary[u, p, r]) # Constraint (9)

model.Add(sum(auxiliary[u, p, r] for r in range(NUM_R)) >= 1 - discrepancy[u, p]) # Constraint (10)

Performance of MILP vs CP

Crampton et al. observe that their MILP model is substantially faster than their CP model. Specifically, they say that "the Gurobi solver was 86 times faster than the CP-SAT-solver-based approach".

Our results are more mixed, depending on the choice of solver for our Model 1 MILP. That is, when we use the CPLEX or Gurobi solvers via NEOS Server, Model 1 MILP performs well – it is generally faster than Model 2 CP run locally. But Model 1 MILP works only for small data sets, otherwise the model exceeds NEOS Server's size limits.

We do not have local CPLEX or Gurobi licenses. Instead, running Model 1 MILP with the HiGHS solver locally allows us to run much larger data sets, but HiGHS is significantly slower than CPLEX or Gurobi.

Running Model 2 CP locally is faster than Model 1 MILP with HiGHS. Therefore, we focus on running the models locally, using Model 2 CP. Surprisingly, Model 2 CP also out-performs Crampton et al.'s MILP using Gurobi for one of the data sets, as described below.

Data sets

Our GitHub repository contains several data sets. Here we focus on the Domino, Firewall 2, and Healthcare data sets to test that our model implementations are correct. The data set characteristics are shown in Figure 8. That is:

- Domino. This data set has 79 users and 231 permissions. Only a total of 730 permissions are assigned to the users, so the user-permissions matrix has a low density of 4.0% (=730/(79*231)).

- Firewall 2. This data set is much larger and has moderate density. Large data sets are generally more difficult to solve.

- Healthcare. This data set is small but has a high density, which makes it difficult to prove optimality.

Results: Comparison with Crampton et al.

Perfect fit, with zero discrepancies

Often the objective of a role mining project is to find the minimum number of roles needed to exactly cover the current user-permissions matrix. With our models, this can be done by a trial-and-error search until we find the smallest number of roles NUM_R with zero discrepancies.

The results of following that procedure for each data set and each type of allowed permission changes are shown in Figure 9.

We verify the results of Crampton et al., except for the Availability type with the Firewall 2 data set. Our Model 2 CP finds a solution of 10 roles with zero discrepancies, while Crampton et al. find a solution of 11 roles (their 10 role solution has 37 discrepancies). Crampton et al. allow up to 10,000 seconds for each of their model runs, while we allow a maximum of 3,600 seconds (though all cases take less than the maximum time).

Imperfect fit, Domino

Setting the number of roles to be less than the perfect fit leads to interesting trade-offs. The model makes an approximate fit to the current user-permissions matrix, with non-zero discrepancies. Running our Model 2 CP on the three data sets, and comparing our results with Crampton et al., we get either the same or better results (fewer discrepancies). We present a selection of the possible cases below.

The result for the Domino data set with the Noise \(f_{u,p}\) matrix is shown in Figure 10. Note the vertical log scale. Our results match Crampton et al.'s results in all cases, except that their model proves optimality in more of the cases.

For the 79 users, a minimum of 20 roles is sufficient to perfectly cover the user-permissions. With fewer than 20 roles, some users gain or lose permissions. As the number of roles is reduced, the number of discrepancies rapidly increases (hence the log scale). The choice of an acceptable trade-off between the number of roles and the number of discrepancies will depend on the situation.

Imperfect fit, Healthcare

The result for the Healthcare data set with the Noise \(f_{u,p}\) matrix is shown in Figure 11. Our results match Crampton et al.'s results (including cases proven optimal), except for the case with 8 roles: our best solution has a slightly better solution of 15 discrepancies rather than the 16 reported by Crampton et al.

Imperfect fit, Firewall 2

The Firewall 2 data set with Security \(f_{u,p}\) matrix is more interesting. Some of our solutions, found using Model 2 CP, are substantially better than the solutions found by Crampton et al. using their MILP model. As shown in Figure 12, given a 3,600 second maximum run time, our Model 2 CP finds optimal solutions for all cases, whereas the Crampton et al. model finds optimal solutions in only a few cases with a 10,000 second maximum run time.

Although Crampton et al. observe that their MILP with Gurobi typically performs better than their CP model using CP-SAT, that is not always true in our model runs. Our CP model performs much better with this data set, finding either the same or much better solutions in a shorter time. Therefore, this data set illustrates an important point about optimization modelling in general: model performance is a function of many factors, including formulation, model type, solver, and hardware. Just because a specific model type (e.g. CP or MILP) performs well with one data set does not necessarily mean that it will perform well with a different data set. The only way to be sure about performance is to try solving a model.

Conclusion

In this article, we implement a new formulation for the role mining problem – as recently published by Crampton et al.

Like Crampton et al.'s paper, we build both Constraint Programming (CP) and Mixed Integer Linear Programming (MILP) versions of the model formulation.

We replicate Crampton et al.'s results, though in some cases we obtain better solutions. The better solutions illustrate an important general point about optimization modelling: performance depends on many factors. In this situation, the MILP model using the Gurobi or CPLEX solvers mostly performs better than the CP model using the CP-SAT solver. But, in some cases, the CP model performs better – finding better solutions in a shorter run time.

It is very difficult to predict what type of model will perform best in a specific situation with a given data set. Often, the only way to be sure is to build and run different models, to see which performs best.

If you would like to know more about this model, or you want help with your own models, then please contact us.

References

Crampton J., Eiben E., Gutin G., Karapetyan D., & Majumdar D. (2024) "Bi-objective optimization in role mining", ACM Transactions on Privacy and Security, Volume 28, Issue 1, Article No.: 5, Pages 1 - 22. Preprint