4 November 2023

We continue our article series looking at a common modelling issue: The solution is optimal, but not practical.

In the previous article, we built Model 2 for our paper coverage problem – a linear model that considered a subset of the possible stock paper size combinations. Model 2 found optimal solutions for each case in around 2 seconds, which is an enormous improvement compared with the performance of the non-linear Model 1. However, because we use only a subset of the possible combinations, Model 2's solutions are not globally optimal.

In this article, we extend Model 2 to consider all possible combinations of stock paper sizes. Consequently, Model 3 is quite large, with more than 1 million binary variables. If we can solve this model, then it should produce globally optimal solutions.

Articles in this series

The articles in this "Optimal but not practical" series are:

- First attempt. Model 1: Non-linear model that finds optimal solutions for small data sets, but not for the full data set.

- Linear, partial data set. Model 2: Linearized model using a small sub-set of the full data. Finds local optima.

- Full data set. Model 3: Extends Model 2 to use the full data set. Finds globally optimal solutions, but requires a large amount of memory.

- Column generation. Model 4: Variation of Model 2, with randomly-generated columns. May find globally optimal solutions, though not guaranteed to do so.

- Either/or BigM. Model 5a and 5b: Explicitly models rotation of the items using BigM constraints. Three times larger than Model 3, so difficult to solve.

- Either/or Disjunction. Model 5c: Explicitly models rotation of the items using Pyomo's Generalized Disjunctive Programming (GDP) extension.

- Virtual machine. We set up a Google Compute Engine with 128 GB of RAM to run Model 3 on data sets larger than we can run on our local modelling computer.

Download the models

The models described in this series of articles are built in Python using the Pyomo library.

The files are available on GitHub.

Situation

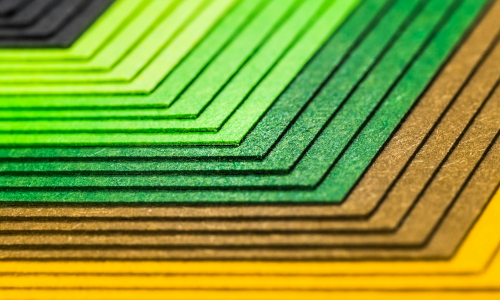

In our paper manufacturing process, we produce 100 unique paper items. Each item is rectangular with known width and length. We also know the annual order quantity for each item.

The items are cut from larger stock paper products. These stock products can be made for us in any size we want. The width and length of a stock product must be greater than or equal to the item size that is cut from it. Our manufacturing process requires that only one item is cut from each stock product. The stated objective is to minimize the waste from trimming the stock products to make items, weighted by the annual quantity of each item.

Given this statement of the situation, the optimal solution is trivial: Have 100 stock products, each of which exactly matches one of the 100 item sizes. This solution is perfect in the sense that it has zero waste. An optimization model with the objective of minimizing waste should find this solution.

However, managing 100 stock products is not practical because it would make the manufacturing process too complex. In practice, we want only a few stock products.

We don't have an objective function that quantifies the trade-off between the number of products and the amount of trim waste that is acceptable. Instead, the trade-off is a qualitative aspect that the decision maker will consider. Consequently, our modelling task is to inform the decision-making process by quantifying what we can. That is:

- We want to use only a few stock products, say between 1 and 10.

- For a given number of stock products, what sizes should they be to minimize weighted trim waste in the production of the items?

- What is the trade-off between the number of stock products and the amount of weighted trim waste?

Note that a heuristic method has already been devised to find a good, though not optimal, solution for six stock products. We'll use the heuristic solution as a benchmark for comparison with our optimization modelling.

Design of Model 3

In building Model 2, we made all the relationships linear, unlike Model 1. We decided to use only a subset of the possible stock paper size combinations – that is, we allowed the model to consider only paper sizes that match the item sizes. As a result, the model has 100 candidate stock product sizes to choose from (plus one more with dimensions that match the maximum width and length of the items, to ensure feasibility). Therefore, Model 2 has 10,201 binary variables. The HiGHS solver finds an optimal solution for each case in around 2 seconds.

Given that the HiGHS solver handles Model 2 easily, we now expand the set of candidate stock paper sizes to include all possible combinations of the item widths and lengths. That is, like Model 2, we pre-compute a set of stock products, each with specified width, length, and area. The model can then use binary variables to allocate those products to the items, ensuring that they fit, while minimizing the total weighted trim waste.

With 100 items, there are 100 * 100 = 10,000 candidate stock products (combinations of item widths and lengths, considered independently). We need a binary variable for each candidate, to decide which candidates to use, which is 10,000 binary variables. We also have a constraint that ensures we use the specified number of stock products for the current case.

Then we need to allocate the candidates to the items. With 10,000 candidates and 100 items, we need 10,000 * 100 = 1,000,000 binary variables. Including the variables for selecting which candidates to use, we have a total of 1,010,000 binary variables.

Since we'll select only a few stock products, and each item is cut from exactly one stock product, almost all the 1 million+ binary variables will be zero. Even so, this is quite a large model.

Formulation for Model 3

The formulation for Model 3 is identical to the formulation for Model 2, except that the list of candidate sizes in Model 3 is larger.

Implementation

Like Models 1 and 2, we implement Model 3 in Python using the Pyomo library. Much of the code is either the same or similar.

The main difference from Model 2 is that we need to generate the list of candidate stock products that has all combinations of item width and length considered independently. We do this by creating Pyomo parameters for the width, length, and area of each candidates using the item widths and lengths, as follows:

Model.Candidate = pyo.Set(initialize = range(0, len(Width) * len(Width)))

# Define candidate product sizes using width and length of items, taking item width and lengths independently to enumerate all combinations

Model.CandidateWidth = pyo.Param(Model.Candidate, within = pyo.NonNegativeIntegers, mutable = True)

Model.CandidateLength = pyo.Param(Model.Candidate, within = pyo.NonNegativeIntegers, mutable = True)

Model.CandidateArea = pyo.Param(Model.Candidate, within = pyo.NonNegativeIntegers, mutable = True)

for i in Model.Item:

for j in Model.Item:

Model.CandidateWidth[i * len(Width) + j] = Width['Item'][i]

Model.CandidateLength[i * len(Width) + j] = Length['Item'][j]

Model.CandidateArea[i * len(Width) + j] = Width['Item'][i] * Length['Item'][j]

Note that, unlike Model 2, we don't need to include an extra candidate to ensure feasibility, because a candidate with the maximum width and length of the items is already included in the fully enumerated list of candidates.

Solutions

Our main concern with Model 3 is that it has more than a million binary variables. It turns out that the HiGHS solver handles this model with ease. Each of the cases (number of stock products from 1 to 10) solves to optimality in a few minutes – from about 5 minutes for the smaller cases, up to about 12 minutes for the larger cases.

Compared with Model 1, which also considered all possible combinations, though using a non-linear formulation, this is a huge improvement. We tried several non-linear solvers on Model 1, with the BARON solver on NEOS Server being the only one that produced any results – though it struggled to get feasible solutions in 8 hours and found optimal solutions only for the smallest cases. The difference in performance between the non-linear Model 1 and the linear Model 3 shows the potential gain that may be achieved by linearization of a model's formulation.

The solutions produced by Model 3 are shown on Figure 2, along with the solutions found by Models 1 and 2, plus the heuristic solution for the case with 6 stock products.

The solutions for Model 3 are only a modest improvement relative to Model 2. For example, the weighted trim loss for 6 stock products is 1.68% compared with 1.99% for Model 2. So, it turns out that using only a small (1%) subset of the possible size combinations is very nearly as good as using all the combinations. Relative to the 5.33% weighted trim waste for the heuristic solution, Model 3's optimal solution reduces waste by more than two-thirds, which is certainly worthwhile.

It is interesting to note that we need only a few stock products to get close to the perfect, but impractical, solution of 100 stock products. For example, using 10 stock products produces weighted trim waste of just over 0.5%, compared with 0% trim waste for 100 stock products. Therefore, a practical solution, using only a few stock products, is also a reasonably efficient solution.

HiGHS vs CBC

We were concerned about Model 3 having over a million binary variables – which is why we first built Model 2, using a subset of the candidates. But it turns out that the HiGHS solver solves Model 3 to optimality in a few minutes. Impressive.

For comparison, we also solved Model 3, with 6 stock products, using the CBC solver. CBC took 94 minutes to find an optimal solution, compared with 8 minutes using HiGHS for the same case. This is consistent with our experience of solver performance for a variety of models – for almost all models, the HiGHS solver is substantially faster than CBC.

Therefore, for linear models, we now use, and recommend, the HiGHS solver in preference to the CBC solver.

Note that Model 3 is too large to solve on NEOS Server, so we are unable to test how fast commercial solvers like CPLEX or Gurobi would solve the model. It is almost certain that the commercial solvers would be faster, but the open-source HiGHS solver is sufficiently fast in this situation that the potential gain from using commercial solvers is not material.

Next steps

At this point, we've solved the original problem. We have a collection of optimal solutions for each of the cases. These solutions fulfil the quantitative objective of minimizing trim waste, as well as the qualitative objective of using "only a few" stock product sizes. There isn't a uniquely obvious best solution – that depends on the decision maker's preference for fewer stock products versus the total weighted trim waste of each case. However, the decision maker can now consider the practical trade-off between trim waste and number of stock products, enabling them to make an informed decision about which solution they prefer for their situation.

But from a modelling perspective, there is more that we can learn. Model 3 works well with the 100 items in the given situation. But what if we have more items? The number of variables in Model 3 grows very quickly – at approximately the cube of the number of items. For example, 100 items require about 1 million variables; but 200 items would require about 8 million variables. HiGHS uses up to 7.5 GB of RAM while solving Model 3 with 100 items. If memory usage is even close to proportional (it could be more or less than proportional), then HiGHS would likely need more memory than our computer has. Even if we had enough memory, the solve time may also increase substantially. Therefore, increasing the number of items will, eventually, make Model 3 unsolvable.

So, in the next article, we'll look at how we can modify Model 3 to solve a larger number of items.

Conclusion

In this article we solve our linearized model using the fully enumerated data set of all item size combinations. Model 3 finds globally optimal solutions for all cases and, using the HiGHS solver, solves to optimality in a few minutes. So, we've solved the problem and provided information that the decision maker can use to decide how to proceed.

But we can extend our modelling in interesting ways. In the next article, we'll modify Model 3 to find solutions for data sets that otherwise would be too large to solve.

If you would like to know more about this model, or you want help with your own models, then please contact us.

References

This series of articles addresses a question asked on Reddit:

Require improved & optimized solution, 14 September 2023.