11 November 2023

We continue our article series looking at a common modelling issue: The solution is optimal, but not practical.

In the previous article, we built Model 3 for our paper coverage problem – a linear model that enumerates all possible stock paper size combinations, then chooses the sizes that minimize trim waste. Model 3 finds globally optimal solutions for each case, so we've solved the original problem. But, from a modelling perspective, there's more we can learn from this situation.

An issue with Model 3 is that it is close to the maximum size we can solve, due to memory usage. This is somewhat unusual – normally, we're concerned about the time to solve a model, but for this model the concern is the amount of memory it needs. Specifically, the number of variables and the amount of memory used both increase with the cube of the number of items. For example, an instance with 100 items has more than 1,000,000 variables, and the HiGHS solver uses up to 7.5 GB of RAM. If we double the number of items, to 200, then the model would have more than 8,000,000 variables and require more RAM than our PC has (we tried).
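To make the cubic growth concrete, the following sketch extrapolates from the 100-item instance. It assumes the variable count is roughly the number of items cubed, and that memory use scales in proportion to the variable count from the 7.5 GB we observed. The function name and the proportional-memory rule are illustrative assumptions, not measurements.

```python
def model3_estimate(num_items, ref_items=100, ref_gb=7.5):
    """Rough Model 3 size: variables ~ items cubed, memory scaled from a reference run.

    ref_items and ref_gb are the observed 100-item case (7.5 GB with HiGHS).
    Proportional memory scaling is an assumption, not a measured result.
    """
    variables = num_items ** 3
    memory_gb = ref_gb * (num_items / ref_items) ** 3
    return variables, memory_gb


for n in (100, 200):
    variables, memory_gb = model3_estimate(n)
    print(f"{n} items: about {variables:,} variables, roughly {memory_gb:,.1f} GB")
```

Under these assumptions, doubling the number of items multiplies both the variable count and the estimated memory by eight.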

So, if we can't use Model 3 with a larger data set, then what can we do? Model 2 found good, though mostly sub-optimal, solutions by considering only a 1% subset of the possible stock product combinations. In this article, we develop Model 4, which extends Model 2 by adding randomly generated stock product candidates. The idea is that the solver might be able to find better solutions using a larger subset of candidates, without needing to consider every possible candidate simultaneously.

Our approach for Model 4 is a type of random column generation technique. Strictly speaking, we're not doing standard column generation, which uses a sub-model to decide which columns to add. Instead, we randomly generate columns, solve the model, then repeat some number of times while noting the best solution found. This approach isn't guaranteed to find globally optimal solutions, but it should get close.

Articles in this series

The articles in this "Optimal but not practical" series are:

- First attempt. Model 1: Non-linear model that finds optimal solutions for small data sets, but not for the full data set.

- Linear, partial data set. Model 2: Linearized model using a small subset of the full data. Finds local optima.

- Full data set. Model 3: Extends Model 2 to use the full data set. Finds globally optimal solutions, but requires a large amount of memory.

- Column generation. Model 4: Variation of Model 2, with randomly-generated columns. May find globally optimal solutions, though not guaranteed to do so.

- Either/or BigM. Model 5a and 5b: Explicitly models rotation of the items using BigM constraints. Three times larger than Model 3, so difficult to solve.

- Either/or Disjunction. Model 5c: Explicitly models rotation of the items using Pyomo's Generalized Disjunctive Programming (GDP) extension.

- Virtual machine. We set up a Google Compute Engine virtual machine with 128 GB of RAM to run Model 3 on data sets larger than we can run on our local modelling computer.

Download the models

The models described in this series of articles are built in Python using the Pyomo library.

The files are available on GitHub.

Situation

The situation is the same as for the previous models in this series.

Column generation

Column generation is a technique used to solve models that would otherwise be too large to solve – usually because of the run time or, like our situation, because of the model's memory requirements.

The idea is to start with a small subset of the possible variables (columns in the constraints), then iterate by adding more variables, a few at a time, and re-solving the model. Assuming most of the variables will be zero in an optimal solution, we don't need most of the variables, so hopefully we can find an optimal (or, at least, good) solution without needing to consider most of the variables.

Our model is well-suited to the column generation technique. Normally, column generation would use a sub-model to decide which variables to add at each iteration. The sub-model would use the main model's shadow prices to pick variables that are likely to be favourable. But given that Model 2 already finds good solutions, we can just add some random variables (representing stock product candidates), and then see what happens when we re-solve. This approach is both simpler and faster than implementing the usual column generation technique.
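For comparison, the pricing step of standard column generation can be illustrated with a small sketch. In a minimization problem, a column is worth adding when its reduced cost (its objective coefficient minus the shadow-price-weighted sum of its constraint coefficients) is negative. The shadow prices, costs, and columns below are hypothetical numbers, not values from our model. Because our model has binary variables, the shadow prices would have to come from its linear relaxation.

```python
def reduced_cost(cost, column, duals):
    """Reduced cost of a column: cost minus the dual-weighted constraint coefficients."""
    return cost - sum(pi * a for pi, a in zip(duals, column))


# Hypothetical shadow prices for three constraints of a main model
duals = [4.0, 2.5, 0.0]

# Hypothetical candidate columns: name -> (objective cost, constraint coefficients)
columns = {
    "A": (5.0, [1, 0, 1]),
    "B": (3.0, [1, 0, 0]),
    "C": (7.0, [1, 1, 1]),
}

priced = {name: reduced_cost(cost, col, duals) for name, (cost, col) in columns.items()}

# In a minimization problem, only columns with negative reduced cost can improve the solution
attractive = [name for name, rc in priced.items() if rc < 0]
print(priced, attractive)
```

Model 4 skips this pricing step entirely and adds columns at random, which is why it is simpler to implement.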

Design of Model 4

Model 4 extends Model 2 with the addition of two features:

- Extra candidates. In addition to the subset of candidates used in Model 2, we randomly generate a specified number of candidate sizes. Each random candidate is created from the item sizes, considering their widths and lengths independently.

- Iterate over the model. We run the model a specified number of times, creating a sample of solutions. We note the best solution found so far, as we iterate the model over the initial plus randomly-generated candidates.

A beneficial side-effect of iterating the model with random candidates is that we get a variety of alternative solutions. In general, having additional solutions can be useful as we might prefer some of those solutions instead of the optimal solution, for reasons that are not encoded in the model.

Having extra candidates, and/or more iterations, increases the odds of finding better, perhaps globally optimal, solutions. However, more candidates increase both the memory requirements and the run time, while more iterations increase the run time. So we have a trade-off between the number of extra candidates and iterations on one hand, and the time and memory requirements on the other.
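The two design features can be sketched in plain Python on a tiny, made-up data set. This is a minimal illustration, not our Pyomo implementation: the `solve` function is a brute-force stand-in for the solver, the objective (total area wasted, ignoring order quantities) is a simplifying assumption, and all names and item sizes are hypothetical.

```python
import itertools
import random


def trim_waste(items, chosen):
    """Total waste if each item is cut from the smallest chosen size that covers it."""
    total = 0
    for width, length in items:
        areas = [cw * cl for cw, cl in chosen if cw >= width and cl >= length]
        if not areas:
            return None  # at least one item cannot be covered
        total += min(areas) - width * length
    return total


def solve(items, candidates, num_products):
    """Brute-force stand-in for the solver: try every combination of candidates."""
    best_waste, best_sizes = None, None
    for chosen in itertools.combinations(candidates, num_products):
        waste = trim_waste(items, chosen)
        if waste is not None and (best_waste is None or waste < best_waste):
            best_waste, best_sizes = waste, chosen
    return best_waste, best_sizes


def base_candidates(items):
    """Model 2 style candidates: the item sizes plus one catchall size for feasibility."""
    catchall = (max(w for w, _ in items), max(l for _, l in items))
    return set(items) | {catchall}


def random_candidates(items, count, rng):
    """Sample width and length independently from the items, sorted so width >= length."""
    widths = [w for w, _ in items]
    lengths = [l for _, l in items]
    sizes = set()
    for _ in range(count):
        d1, d2 = rng.choice(widths), rng.choice(lengths)
        sizes.add((max(d1, d2), min(d1, d2)))
    return sizes


def iterate(items, num_products, extra, iterations, seed=0):
    """Re-solve with fresh random candidates each time, noting the best solution found."""
    rng = random.Random(seed)
    base = base_candidates(items)
    best_waste, best_sizes, history = None, None, []
    for _ in range(iterations):
        candidates = sorted(base | random_candidates(items, extra, rng))
        waste, sizes = solve(items, candidates, num_products)
        history.append(waste)
        if waste is not None and (best_waste is None or waste < best_waste):
            best_waste, best_sizes = waste, sizes
    return best_waste, best_sizes, history


# Hypothetical items as (width, length), with width >= length
ITEMS = [(10, 8), (9, 5), (7, 6), (6, 4), (5, 5)]

baseline_waste, _ = solve(ITEMS, sorted(base_candidates(ITEMS)), 2)
best_waste, best_sizes, history = iterate(ITEMS, num_products=2, extra=6, iterations=5)
print(baseline_waste, best_waste, best_sizes, history)
```

Because every iteration includes all of the base candidates, no iteration can do worse than the base-only solution. The random candidates can only help.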

A further extension would be to retain the locally optimal sizes from one iteration to the next, as those candidates are more likely to be part of a globally optimal solution. This would be closer to the standard column generation method. As we'll see below, this extension isn't necessary.

Formulation for Model 4

The formulation for Model 4 is the same as for Model 2. The only difference is that we have data that includes randomly generated candidate stock products.

Implementation

Much of the code for Model 4 is the same as for the previous models – that's why we divided the models into modules, to allow us to reuse code.

The main differences from previous models are that we need to generate the random candidates and then iterate over the model some number of times. We also simplify the model's output to be just one line per iteration.

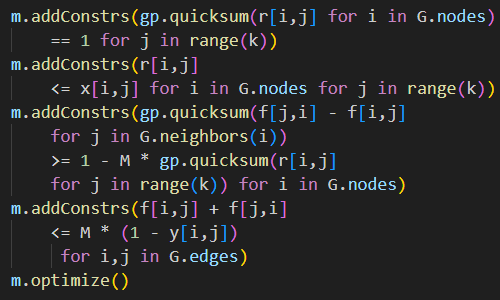

Generating the extra random candidates is straightforward. We define the Candidate set's length to equal the number of items, plus one for a catchall feasibility candidate, plus the number of extra random candidates. Then we generate the random candidates, sampling from the item dimensions:

    Model.Candidate = pyo.Set(initialize = range(0, len(Width) + 1 + ExtraCandidates))

    # Plus a specified number of extra candidates, with width and length independently chosen from item widths and lengths
    WidthOriginal = Model.CandidateWidth
    LengthOriginal = Model.CandidateLength
    for i in range(0, ExtraCandidates):
        Dimension1 = rnd.choice(Width['Item'])   # Choose one from original widths
        Dimension2 = rnd.choice(Length['Item'])  # Choose one from original lengths
        SortedWidth = max(Dimension1, Dimension2)   # Sort so that width >= length
        SortedLength = min(Dimension1, Dimension2)
        Model.CandidateWidth[len(Width) + 1 + i] = SortedWidth
        Model.CandidateLength[len(Width) + 1 + i] = SortedLength
        Model.CandidateArea[len(Width) + 1 + i] = Model.CandidateWidth[len(Width) + 1 + i] * Model.CandidateLength[len(Width) + 1 + i]

Note that we sort the chosen width and length for each candidate so that the width is always >= the length. As noted in Model 1, sorting the dimensions mimics rotation of the stock paper. We'll explore this issue more in the next article.

Solutions

Test with 100 items

We test Model 4 by running the 100-item data, for 10 iterations, each having 400 extra random candidates. With a total of 100 + 1 + 400 = 501 candidates in each iteration, the model has just over 50,000 variables. This is substantially smaller than the 10,000 candidates and 1,000,000+ variables that Model 3 uses to represent the same situation, having enumerated all the possible candidates.

A part of Model 4's output is shown in Figure 1. An asterisk indicates the best solution found so far, as the program iterates the model 10 times for each number of products. The sizes are the optimal pairs of width and length. For 2 products, all of the iterations happen to have the same solution – though that is not always the case. For more products, the iterations find a variety of solutions.

Even though Model 4 considers only about 5% of the potential candidates in each iteration, it finds a globally optimal solution at least once for every case (2 to 10 stock products), though it is not guaranteed to do so. For example, the globally optimal solutions for 2 to 4 products have trim waste of 7.90%, 4.45%, and 2.99% respectively. The run time is around 12 seconds per iteration.

Figure 1. Part of Model 4's output for 100 items (sizes are width and length pairs)

    Products  Objective  Waste  Best  Sizes
    2         275,030    7.90%  *     1006 768   862 596
    2         275,030    7.90%         862 596  1006 768
    2         275,030    7.90%        1006 768   862 596
    2         275,030    7.90%        1006 768   862 596
    2         275,030    7.90%         862 596  1006 768
    2         275,030    7.90%         862 596  1006 768
    2         275,030    7.90%        1006 768   862 596
    2         275,030    7.90%         862 596  1006 768
    2         275,030    7.90%         862 596  1006 768
    2         275,030    7.90%         862 596  1006 768
    3         171,269    4.92%  *     1006 768   930 602   862 596
    3         164,974    4.74%  *     1006 768   902 598   862 594
    3         163,670    4.70%  *     1006 768   862 594   902 596
    3         154,969    4.45%  *     1006 768   902 604   862 596
    3         163,670    4.70%        1006 768   862 594   902 596
    3         167,670    4.82%        1006 768   942 604   862 596
    3         167,670    4.82%        1006 768   942 604   862 596
    3         163,886    4.71%         862 596  1006 768   902 624
    3         159,216    4.58%        1006 768   902 614   862 596
    3         167,670    4.82%        1006 768   862 596   942 604
    4         116,845    3.36%  *      842 584   862 596  1006 768   942 604
    4         113,928    3.27%  *      862 596  1006 768   902 596   842 584
    4         110,290    3.17%  *      842 584  1006 768   902 618   862 596
    4         104,144    2.99%  *     1006 768   842 584   862 596   902 604
    4         104,144    2.99%        1006 768   902 604   862 596   842 584
    4         112,261    3.23%         842 584  1006 768   862 596   902 602
    4         117,459    3.38%         842 584  1006 768   930 614   862 596
    4         104,144    2.99%         842 584  1006 768   862 596   902 604
    4         118,791    3.41%         842 584  1006 768   862 596   942 608
    4         104,144    2.99%         842 584  1006 768   902 604   862 596

Of course, we know that we've found globally optimal solutions only because we already found those solutions using Model 3 to solve this data. Even so, these results are very encouraging for larger data sets. Because we find globally optimal solutions, we don't need to extend our column generation technique to retain useful columns from previous solutions.

As an aside, looking at the solutions found by Model 4, we note that the model finds alternative optima that have the same sizes but in a different order. As an extension of the model, we could consider adding symmetry-breaking constraints that force a sort order on the sizes to eliminate these alternatives. Such constraints are sometimes useful for speeding up the solve process, though that isn't a concern with this model as it is already fast enough.
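To see that these alternatives really are the same solution, we can put each one into a canonical order and compare. The sketch below uses the four 4-product solutions with 2.99% waste from the output above. Note that this only post-processes the results; it is not a symmetry-breaking constraint inside the model, and the `canonical` helper is a hypothetical name.

```python
# The four 4-product solutions with 2.99% waste, as (width, length) pairs in output order
solutions = [
    [(1006, 768), (842, 584), (862, 596), (902, 604)],
    [(1006, 768), (902, 604), (862, 596), (842, 584)],
    [(842, 584), (1006, 768), (862, 596), (902, 604)],
    [(842, 584), (1006, 768), (902, 604), (862, 596)],
]


def canonical(sizes):
    """Order the sizes from largest to smallest so that equivalent solutions compare equal."""
    return tuple(sorted(sizes, reverse=True))


distinct = {canonical(sizes) for sizes in solutions}
print(len(distinct), distinct)
```

A symmetry-breaking constraint would have a similar effect within the model, by allowing only one ordering of the sizes to be feasible.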

Extend to 200 items

Next, we create a data set with 200 items. Because of Model 3's large memory requirement, we are unable to solve 200 items to optimality using Model 3.

Instead, Figure 2 shows the best solutions found by Model 2 and Model 4 for this larger data set. Model 2 uses just the 200 item sizes (plus one large size to ensure feasibility) as candidate stock product sizes. Model 4 uses the same candidates, plus 800 extra random candidates.

We chose 800 as the number of extra candidates simply because it seems large enough to give Model 4 a reasonable chance of finding good (hopefully optimal) solutions, while being small enough that the model solves quickly and without running out of memory. With 200 items and 800 extra candidates, Model 4 has just over 200,000 binary variables. This is only 20% of the variables that we solved successfully using Model 3, so we're solving a larger data set with smaller memory usage.

We know that Model 2 finds good, though mostly sub-optimal, solutions. Model 4's solutions are all better than those found by Model 2 – as they must be, because Model 4 includes all the candidates available to Model 2, plus some extra candidates. We don't know if the Model 4 solutions are globally optimal, though they look to be at least very good solutions. We'll return to this issue, considering optimality for a large data set, in a later article.

Extend to even larger data sets

In this article we've focussed on a data set with 200 items. We did so because it is twice the size of the data we can solve to global optimality with Model 3. But the random column generation technique we've used in Model 4 could be applied to even larger data sets.

In the previous article, we demonstrated that the HiGHS solver handles a model that has 1,000,000+ binary variables. With 200 items, Model 4 has 200,000 binary variables. Therefore, we expect that we could find at least good, and perhaps globally optimal, solutions for data sets with even more items.

To test this assertion, we created a data set with 1,000 items. This is 10 times larger than we need, as specified in the original problem situation. With 1,000 items, Model 3 would have more than 1,000³ = 1 billion variables and need several terabytes of RAM to solve. In comparison, Model 4, with 1,000 items and 500 extra candidates, has "only" 1.5 million variables and used 18 GB of RAM to successfully find at least locally optimal solutions with a run time of 14 minutes per iteration. Although we can't guarantee that Model 4's solutions are globally optimal, the trade-off of being able to find solutions at all seems well worthwhile.
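The model sizes quoted in this article follow from simple counting, sketched below. We assume the variable count is dominated by one variable per candidate and item pair, so the actual models have slightly more variables than these figures. The function names are illustrative.

```python
def model3_variables(items):
    """Model 3 enumerates items squared candidates, each paired with every item."""
    return items ** 3


def model4_variables(items, extra):
    """Model 4 has the item sizes, one catchall, and the extra random candidates."""
    candidates = items + 1 + extra
    return candidates * items


# (number of items, extra random candidates) for the three data sets we tested
cases = [(100, 400), (200, 800), (1000, 500)]

for items, extra in cases:
    m3 = model3_variables(items)
    m4 = model4_variables(items, extra)
    print(f"{items:>5} items: Model 3 about {m3:,}, Model 4 about {m4:,} ({m4 / m3:.2%} of Model 3)")
```

The gap widens quickly because Model 3 grows with the cube of the number of items, while Model 4 grows roughly with the square when the number of extra candidates is kept in proportion to the number of items.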

Next steps

In designing our models, we made the assertion that sorting the data to have width >= length for each candidate stock product is equivalent to rotating the stock products. It may not be obvious that this is true.

Therefore, in the next article, we revise the model to explicitly represent rotation via either/or constraints. That is, we have pairs of constraints, representing landscape and portrait rotation, where we want only one of each pair to be active at a time. All going well, we'll demonstrate that (in this situation) sorting the data has the same effect as using either/or constraints to represent rotation of the stock products.
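In the meantime, a brute-force check gives some reassurance about the underlying geometric claim for a single item and a single stock size. The sketch below compares "the item fits in either orientation" against "the sorted item fits the sorted stock size" for every combination of small integer dimensions. It is a sanity check under those assumptions, not a substitute for the either/or model, and the function names are hypothetical.

```python
import itertools


def fits_with_rotation(item, stock):
    """True if the item fits the stock size as given, or after a 90 degree rotation."""
    (a, b), (c, d) = item, stock
    return (a <= c and b <= d) or (a <= d and b <= c)


def fits_sorted(item, stock):
    """True if the item fits once both are sorted so that width >= length."""
    return max(item) <= max(stock) and min(item) <= min(stock)


dims = range(1, 9)
mismatches = [
    (item, stock)
    for item in itertools.product(dims, repeat=2)
    for stock in itertools.product(dims, repeat=2)
    if fits_with_rotation(item, stock) != fits_sorted(item, stock)
]
print(len(mismatches))  # prints 0: the two tests agree on every case checked
```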

Conclusion

In this article we extend Model 2 to handle large data sets via a random column generation technique. Model 4 enables us to find good, perhaps optimal, solutions for data sets that are orders of magnitude too large to solve using Model 3. This technique, which is fast and effective, can potentially be applied to a wide range of situations where a model is unsolvable due to its large size.

In the next article, we'll look at introducing either/or constraints into our model, to explicitly represent rotation of the stock paper sizes.

If you would like to know more about this model, or you want help with your own models, then please contact us.

References

This series of articles addresses a question asked on Reddit:

Require improved & optimized solution, 14 September 2023.