24 October 2023

We continue our article series looking at a common modelling issue: The solution is optimal, but not practical.

In the previous article, we identified a theoretically perfect solution that is impractical to implement due to its complexity. We then developed a non-linear model of the situation, limited to just a few stock product sizes, but that model was difficult to solve.

In this article, we formulate and implement a linear model, with the hope that it will be easier to solve. We focus on considering only a partial set of potential solutions, as a step towards a full linear model.

Articles in this series

The articles in this "Optimal but not practical" series are:

- First attempt. Model 1: Non-linear model that finds optimal solutions for small data sets, but not for the full data set.

- Linear, partial data set. Model 2: Linearized model using a small sub-set of the full data. Finds local optima.

- Full data set. Model 3: Extends Model 2 to use the full data set. Finds globally optimal solutions, but requires a large amount of memory.

- Column generation. Model 4: Variation of Model 2, with randomly-generated columns. May find globally optimal solutions, though not guaranteed to do so.

- Either/or BigM. Model 5a and 5b: Explicitly models rotation of the items using BigM constraints. Three times larger than Model 3, so difficult to solve.

- Either/or Disjunction. Model 5c: Explicitly models rotation of the items using Pyomo's Generalized Disjunctive Programming (GDP) extension.

- Virtual machine. We set up a Google Compute Engine with 128 GB of RAM to run Model 3 on data sets larger than we can run on our local modelling computer.

Download the models

The models described in this series of articles are built in Python using the Pyomo library.

The files are available on GitHub.

Situation

In our paper manufacturing process, we produce 100 unique paper items. Each item is rectangular with known width and length. We also know the annual order quantity for each item.

The items are cut from larger stock paper products. These stock products can be made for us in any size we want. The width and length of a stock product must be greater than or equal to the item size that is cut from it. Our manufacturing process requires that only one item is cut from each stock product. The stated objective is to minimize the waste from trimming the stock products to make items, weighted by the annual quantity of each item.

Given this statement of the situation, the optimal solution is trivial: Have 100 stock products, each of which exactly matches one of the 100 item sizes. This solution is perfect in the sense that it has zero waste. An optimization model with the objective of minimizing waste should find this solution.

However, managing 100 stock products is not practical because it would make the manufacturing process too complex. In practice, we want only a few stock products.

We don't have an objective function that quantifies the trade-off between the number of products and the amount of trim waste that is acceptable. Instead, the trade-off is a qualitative aspect that the decision maker will consider. Consequently, our modelling task is to inform the decision making process by quantifying what we can. That is:

- We want to use only a few stock products, say between 1 and 10.

- For a given number of stock products, what sizes should they be to minimize weighted trim waste in the production of the items?

- What is the trade-off between the number of stock products and the amount of weighted trim waste?

Note that a heuristic method has already been devised to find a good, though not optimal, solution for six stock products. We'll use the heuristic solution as a benchmark for comparison with our optimization modelling.

Design of Model 2

One of the issues with Model 1 is that we are asking it to do too much. That is, it has to decide the stock product widths and lengths (independently selected from lists using binary variables), allocated those products to the items, then calculate weighted areas given those widths and lengths. The resulting functions are awkwardly non-linear.

An alternative approach is to pre-compute a set of stock products, each with specified width, length, and area. The model can then use binary variables to allocate those products to the items, ensuring that they fit. The resulting functions are linear as, unlike Model 1, the width, length, and area are data rather than variables. This approach is conceptually similar to having a model choose from pre-defined cutting patterns or selecting from a list of enumerated shifts when making a schedule.

At this point, we worry about the number of variables that our model will have. We need to consider all combinations of item width and length when deciding the stock product sizes. We'll call these combinations "candidate" products. With 100 items, there are 100 * 100 = 10,000 candidates (combinations of item widths and lengths, considered independently). We'll need a binary variable for each candidate, to decide which candidates to use, which is 10,000 binary variables. That should be OK.

But then we need to allocate the candidates to the items. With 10,000 candidates and 100 items, we'll need 10,000 * 100 = 1,000,000 binary variables. Including the variables for selecting which candidates to use, we'll have a total of 1,010,000 binary variables. Since we'll select only a few stock products, almost all of the 1 million+ binary variables will be zero, so maybe it will be OK. Even so, that is a lot of variables.

Rather than jumping straight to a model with a million variables, as an intermediate step we'll restrict the list of candidate stock products to be the same as the list of item sizes. That is, have 100 candidates instead of 10,000 candidates. Then we'll have 100 + (100 * 100) = 10,100 binary variables. That should be much more manageable for the solver. The trade-off is that our partial set of candidate stock products almost certainly excludes at least some of the best size combinations. Therefore, in most cases it is unlikely that the optimal solution to this partial model is an optimal solution for the full problem.

Formulation for Model 2

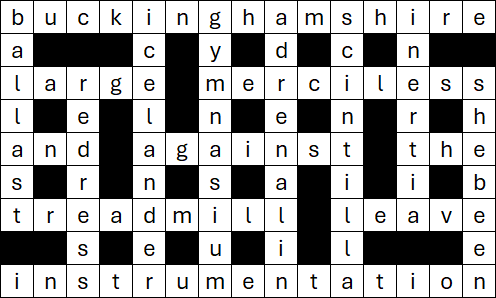

Having decided to restrict Model 2 to using a partial set of candidate stock product sizes, the model formulation is straightforward – as shown in Figure 1.

Key features of the formulation for Model 2 are:

- Equation (1). Our objective is to minimize the trim loss, which is the weighted area of the stock products allocated to each item minus the weighted area of the items. Unlike Model 1, this objective function is linear because we've pre-calculated the candidate stock product areas as data.

- Equation (2) says that the width of an allocated candidate stock product must be at least the width of each item it is allocated to. Equation (3) is the same, except for the length.

- Equation (4) says that we must select the specified number of stock products. In Model 1, the number of selected stock products was implicit in the data set, whereas in Model 2 we have to explicitly select the specified number.

- Equation (5) says that we can allocate a candidate stock product to an item only if that candidate has been selected.

- Equation (6) says that each item is allocated to exactly one candidate stock product.

The constraints are also linear. In addition, this formulation is significantly simpler than Model 1. Even though we have more than 10,000 binary variables, Model 2 should be fairly easy to solve.

Implementation

Like Model 1, we implement Model 2 in Python. Much of the code is either the same or similar.

The main difference, other than the formulation, is that we need to generate the list of candidate stock products. We do this by creating Pyomo parameters for the width, length, and area of each candidates using the item widths and lengths, as follows:

Model.Candidate = pyo.Set(initialize = range(0, len(Width) + 1))

# Define candidate product sizes using width and length of items

Model.CandidateWidth = pyo.Param(Model.Candidate, within = pyo.NonNegativeIntegers, mutable = True)

Model.CandidateLength = pyo.Param(Model.Candidate, within = pyo.NonNegativeIntegers, mutable = True)

Model.CandidateArea = pyo.Param(Model.Candidate, within = pyo.NonNegativeIntegers, mutable = True)

for i in Model.Item:

Model.CandidateWidth[i] = Width['Item'][i]

Model.CandidateLength[i] = Length['Item'][i]

Model.CandidateArea[i] = Width['Item'][i] * Length['Item'][i]

Note that we define the candidate Set to be one longer than the list of items. This is because selecting a few of the item sizes to cover all of the items might not be feasible. Looking back to the 5 item example in Model 1, there are no two item sizes that cover all items. Specifically, the 4th item is not wide enough to cover the width of the 5th item, and the 5th item is not long enough to cover the length of the 4th item. Therefore, to ensure feasibility, we need to add an extra candidate, with dimensions equal to the maximum width and maximum length of the items. We hope to not use this extra candidate because it is, by definition, large – but it might be necessary. We create this extra candidate as follows:

# Extra candidate to ensure feasibility. Dimensions will depend on how the data is sorted

MaxWidth = 0

MaxLength = 0

for i in Model.Item:

MaxWidth = max(MaxWidth, Width['Item'][i])

MaxLength = max(MaxLength, Length['Item'][i])

Model.CandidateWidth[len(Width)] = max(MaxWidth, MaxLength) # We take the max because we want the sizes sorted so that width >= length

Model.CandidateLength[len(Width)] = min(MaxWidth, MaxLength) # Conversely, we take the min because we want length <= width

Model.CandidateArea[len(Width)] = MaxWidth * MaxLength

This extra candidate adds another 101 binary variables, so now we have 10,201 variables, but that's OK.

Solutions

Model 2 is linear, so we use the HiGHS solver, which is installed locally. HiGHS solves each of the cases to optimality in about 2 seconds. This is a vast improvement compared with Model 1, where the solvers struggled to find feasible solutions in eight hours.

Note that we have made a compromise, with Model 2 finding optimal solutions to a partial set of candidates, while Model 1 attempts to find globally optimal solutions. Even so, the solutions for Model 2 are significantly better than for Model 1, as shown in Figure 2 (except for three stock products, where the solution for Model 1 is slightly better than the solution for Model 2). Note that, compared with the previous article, we've narrowed the chart's y-axis range to focus on the solutions for Model 2.

For the case with six stock products, Model 2's solution has 2.0% trim waste compared with 6.2% for Model 1 and 5.3% for the heuristic. For the cases with seven to ten stock products, we now have greatly improved solutions, ranging from 1.5% to 0.6% trim waste.

We note that for the case with six stock products, 12 of the items are allocated to the extra candidate that is defined as the maximum dimensions of the items. This suggests that it might be possible to further improve the solution if we have a larger range of candidate sizes to choose from. The fact that Model 1 found a slightly better solution for the case with three stock products reinforces this assessment. That is, while Model 2's solutions are optimal given the partial set of candidate stock sizes, they are mostly not globally optimal.

Next steps

Model 2 works well. It solves quickly and we now have excellent solutions for all cases. While those solutions are optimal for Model 2, they are not necessarily optimal for the original problem (except for the cases with one and two stock products, which are globally optimal, as confirmed by Model 1). Therefore, there is an opportunity to get even better solutions.

In designing Model 2 we decided to restrict the list of candidates to a partial set, so that our model doesn't have a million+ variables. HiGHS solves Model 2 in a couple of seconds, so perhaps it will handle a larger model? There's only one way to find out: In the next article we'll expand the list of candidates to include all possible combinations of item sizes.

Conclusion

In this article we formulate a linear model for our trim loss minimization situation. To keep the model size small, we limited the list of candidate product sizes to a partial subset of the possible combinations.

Model 2 is linear and works very well. It finds excellent solutions for all of the cases, though most of the solutions are not globally optimal.

In the next article, we'll greatly expand the list of candidate stock products in the hope of finding globally optimal solutions for each case. If, that is, the model isn't too large to solve.

If you would like to know more about this model, or you want help with your own models, then please contact us.

References

This series of articles addresses a question asked on Reddit:

Require improved & optimized solution, 14 September 2023.